“Which animals have the capacity for conscious experience?”

This is how the New York Declaration on Animal Consciousness opens. It was signed last month and currently has 276 signatures from researchers across fields as diverse as philosophy, psychology, evolutionary biology, neurology, and, of course, animal consciousness.

The Declaration states that there are three broad points of agreement when it comes to the question of which animals experience consciousness, namely, that:

1) “There is strong scientific support for attributions of conscious experience to other mammals [than humans] and to birds”;

2) “The empirical evidence indicates at least a realistic possibility of conscious experience in all vertebrates (including reptiles, amphibians, and fishes) and many invertebrates (including, at minimum, cephalopod mollusks, decapod crustaceans, and insects)”; and

3) “When there is a realistic possibility of conscious experience in an animal, it is irresponsible to ignore that possibility in decisions affecting that animal. We should consider welfare risks and use the evidence to inform our responses to these risks”.

I want to start by focusing on the first two points. The topic of consciousness has been a matter of philosophical, theological, and scientific debate for thousands of years. In the 4th century BCE, Aristotle distinguished between different levels of the “soul”, from the “nutritive” (plants), through the “sensitive” (animals) to the “rational” (humans). In the 17th century, René Descartes famously posited the idea of “mind-body dualism”, proposing that the soul is separate from its bodily substrate – an idea which has (for better or worse) influenced Western philosophical thought ever since.

The nature of consciousness is one of the greatest unanswered questions in contemporary science, and it still causes the scientific equivalent of rap beef between competing theories. It poses significant problems for entire fields of study, as Erik Hoel outlined earlier this year in his excellent article exploring how neuroscience is pre-paradigmatic. The New York Declaration is another milestone on our long and arduous journey towards finding the answer.

If you want to understand anything – whether that’s the mating behaviour of peacocks, the political systems of feudal Europe, or, indeed, consciousness – then it’s useful to know how it evolved. In the same way that a model of biological reproduction can be built upon an understanding of how DNA evolved from organic molecules like sugars and proteins, so too can a model of consciousness be built upon an understanding of how consciousness evolved from simple nervous systems. In fact, until you have the model, it’s difficult to build the mathematical theory – the theory of DNA famously first required Crick and Watson’s conceptualisation of a double helix structure.

What follows is not an empirical dissection – this article is a conceptual investigation, the theoretical exploration that comes before the acquisition of empirical data. This is the part of science which is “more art than science”.

But before we get to how consciousness might have evolved, it’s important to make sure we’re all talking about the same concept of consciousness, given that even its definition is not universally agreed. So, let’s start here: how do we define consciousness?

What is Consciousness?

The definition of consciousness has been long-debated, but one of the most widely-cited definitions – and, for me, the most compelling – can be found in Thomas Nagel’s famous 1974 paper “What Is It Like to Be a Bat?”:

“An organism has conscious mental states if and only if there is something that it is like to be that organism — something it is like for the organism.”

This definition is distinct from the concept of the mind – the mind encompasses all of the aspects of mental function, including memories and unconscious processing. Conscious experience is therefore a subset of the entire broad scope of mental processes which make up the mind.

It is also different from how people typically conceive of consciousness – for most non-scientists, the term “consciousness” is synonymous with “self-awareness”. However, using Nagel’s definition, consciousness is much more fundamental. It’s not about self-awareness, it’s about the continuous feedback loop of perception and information and decision and adjustment and action and perception again which makes up the stream of conscious experience. The stream is consciousness.

For us humans, consciousness certainly involves being aware of one’s own thoughts, motives and feelings – self-awareness – but there is no reason to infer that this is a necessary component of consciousness. In fact, if you strip away many of the familiar aspects of the experience of human consciousness – problem solving, feeling, seeing, hearing – it is possible to imagine a conscious experience which is not self-aware, has minimal awareness of its own thoughts and feelings, processes a more limited range of sensory information about its environment, and yet still experiences the pared down stream of consciousness. There is still something it is like to be that organism.

I find it helpful to imagine all of these aspects of the conscious experience in terms of a gradient of consciousness. At one end, you have creatures like humans, orcas, and elephants, in all our intelligent, socially sophisticated, empathic glory. At the other end you have molluscs like mussels, which are still experiencing and interacting with the environment around them but are much less able to perform advanced cognition (thinking, planning, decision-making) or meta-cognition (awareness and adjustment of their own thought processes).

Different consciousnesses evolved to interact with different environments. Living in the shifting sands of an ever-changing social environment places a selective pressure on being able to understand the behaviour of your peers and relatives. The fact that self-awareness in social creatures like primates and elephants evolved at all is likely due to these pressures – it’s an incredibly complex thing to do. This ability, to understand that other creatures have their own thoughts, feelings, and desires, is called “theory of mind”. In humans, theory of mind first emerges in infants at around 6 months old and continues developing all through childhood and adolescence (such is the complexity of understanding the minds of other humans). The other place theory of mind has likely evolved is in ambush predators like crocodiles – again, the selective pressure on understanding the behaviour of prey species drove the development of this advanced inter-species social ability.

On the other hand, the selective pressures acting on a mussel to develop enhanced cognition are much more limited – but that does not mean that there is nothing that it is like to be the mussel. Feeling full from digesting too much food when there are high algae levels in the environment and slowing down its water filtration to compensate; detecting compounds released from a neighbouring mussel which has been damaged and feeling the muscle-tightening sensation of fear, clamping the shell closed for protection; secreting sticky threads to attach to rocks until it senses that it is secure enough to avoid getting swept away by water currents.

Other arguments

There is a good deal of opposition to Nagel’s definition, of course, even within the scientific community, as it implies that consciousness is a much more widespread phenomenon than previously imagined.

Some of this opposition is political, as alluded to in the third point of the New York Declaration, because taking consciousness into consideration on welfare decisions will have inevitable downstream consequences on any animal-based industry. It would also require reframing all our interactions with the natural world – and there are plenty of vested interests who would prefer to avoid another level of bureaucracy. Other opposition is ideological, perhaps based on religious texts or concepts of human exceptionalism.

It is hard to reason with political or ideological opposition to the idea of animal consciousness, but some of the opposition is philosophical in nature. If you treat science or philosophy as purely inductive – drawing conclusions based on observable data – then the only thing that we know for certain to be conscious is the human mind. It is, after all, the only consciousness we can directly observe. The solipsists among you might extrapolate on this single data point and say, therefore, that other minds – and even the external world – cannot be known and might not even exist. This is the essence of the simulation hypothesis. However, when you include deductive reasoning – logically drawing conclusions based on general principles – it is possible to present testable hypotheses about the world around us which are more useful in scope.

Occam’s razor states that “the hypothesis which has the fewest assumptions is most likely to be correct.” This philosophical “razor” – or principle for “shaving” away unlikely theories – has been used to underpin much modern science. It played a crucial role in the development of, among others, the heliocentric model of the solar system (as opposed to the earlier geocentric model), the germ theory of disease (as opposed to “bad air”), and even the theory of evolution by natural selection (as opposed to ideas of creation).

At first glance, it appears that the simulation hypothesis’s position contains no assumptions – it simply uses the evidence of one’s own conscious experience. But, on closer inspection, it raises many more questions than it answers: if your conscious experience is being simulated, then what’s doing the simulation? There are a whole host of unspoken assumptions underlying this limited interpretation of the single data point of evidence.

If, instead, we deduce that the world exists independently of our conscious experience, we can use a whole range of further observations and evidence to build a clearer picture of the nature of reality – including the subset of reality we call consciousness.

Given that the substrate of your individual human consciousness is, based on all available evidence, your individual human brain, it is reasonable to infer that all alive, awake humans possess conscious experience. But if we stop there – and say, therefore, that we must conclude that only humans are conscious, then we are making the assumption that consciousness only evolved in the last 6 million years, since our divergence from chimpanzees. This assumption is not supported by the overwhelming evidence of self-awareness – what many people consider to be the cornerstone of human conscious experience – which can be found in animals like chimps, dolphins, elephants, magpies, and other social animals – even dogs.

We might then instead make the assumption that consciousness is a property of social animals and therefore appears in multiple species as a result of convergent evolution – like how bats (a group of mammals), birds (a group of dinosaurs), and bees (a group of insects) all evolved wings independently from each other. But this makes an unspoken assumption about the nature of consciousness as well – that it is dependent upon self-awareness. And, as I argued above, self-awareness is not a requisite for experiencing the world around you.

By understanding the selective pressures acting upon the evolution of our ancestors, and the evolutionary timeline of the animal nervous system, I believe it is possible to make a different assumption about the nature of consciousness.

My hypothesis

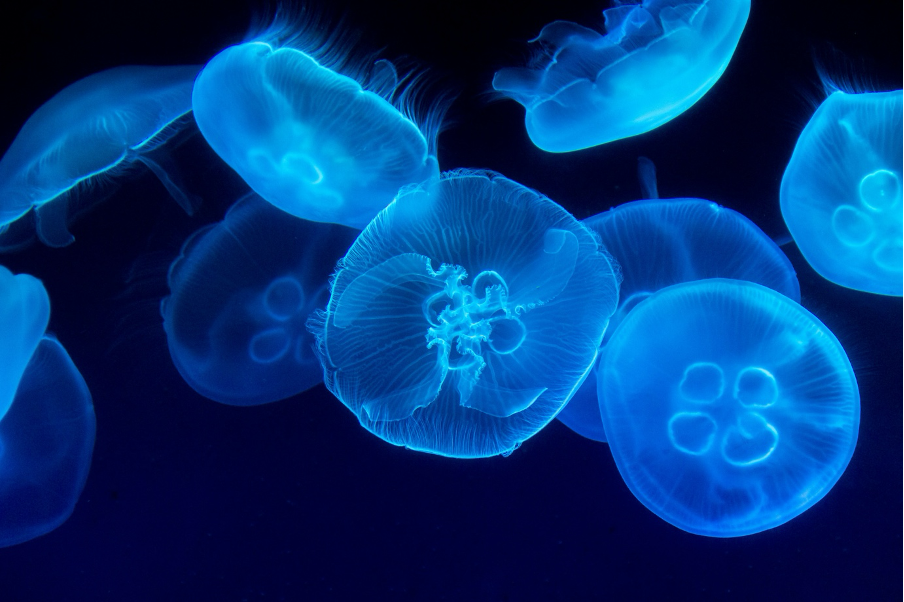

In the spirit of Occam’s Razor, I am proposing a hypothesis which only requires a single assumption – that, as defined by Thomas Nagel, consciousness is an emergent property of the animal nervous system. This assumption implies that consciousness evolved alongside the first nervous systems in primitive relatives of modern jellyfish, over 580 million years ago, and that everything else we see and understand as part of our conscious experience is variation in scope and scale.

This means that there is something that it is like to be a jellyfish.

Let’s start by exploring the branches of the tree of life which split from our ancestors before the evolution of the nervous system and see if this hypothesis holds up.

What ISN’T Consciousness?

A good place to start unpacking this is with chemotaxis in bacteria – the movement of the organism in response to a chemical stimulus.

For any organism, undirected movement is less advantageous than directed movement, but in order to have directed movement it is necessary to sense your environment. In bacteria, this process of directed movement – chemotaxis – is triggered by receptors on the outside of the cell membrane which, when bound to specific chemicals, begin a chemical signalling cascade within the cell. Roughly speaking, if an attractive chemical – like sugar – is sensed, then the cascade triggers anticlockwise rotation of the bacterium’s flagella (the tiny tails that enable it to swim). This causes the flagella to bundle together and create a “run” of forward movement. In contrast, if a repellent chemical is sensed, the cascade causes clockwise rotation of the flagella, which breaks the bundle apart and causes the bacterium to “tumble”, randomly changing its three-dimensional orientation before it sets off on a new run. Repeated over many runs and tumbles, this biases the bacterium’s overall movement towards attractants and away from repellents.
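The run-and-tumble behaviour described above can be sketched in a few lines of Python. This is a toy model rather than real biochemistry: the attractant field, the tumble probabilities, and the function names are all assumptions made for illustration. The simulated cell simply tumbles less often when the attractant level is rising, which is enough to bias a random walk towards the source.

```python
import math
import random


def attractant(x, y):
    """Hypothetical attractant field: concentration rises towards the origin."""
    return -math.hypot(x, y)


def run_and_tumble(steps=2000, seed=0, biased=True):
    """Simulate one cell on a 2D plane and return its final distance from the source."""
    rng = random.Random(seed)
    x, y = 50.0, 0.0                        # start 50 units away from the source
    heading = rng.uniform(0, 2 * math.pi)   # initial swimming direction
    previous = attractant(x, y)
    for _ in range(steps):
        # Run: swim one unit in the current direction.
        x += math.cos(heading)
        y += math.sin(heading)
        current = attractant(x, y)
        # Assumed rule: tumble rarely while conditions improve, often otherwise.
        # With biased=False the cell ignores the chemical and tumbles at a fixed rate.
        if biased and current > previous:
            p_tumble = 0.1
        else:
            p_tumble = 0.5
        if rng.random() < p_tumble:
            # Tumble: pick a completely random new direction.
            heading = rng.uniform(0, 2 * math.pi)
        previous = current
    return math.hypot(x, y)


def mean_final_distance(biased, seeds=range(20)):
    """Average the final distance from the source over several random seeds."""
    distances = [run_and_tumble(seed=s, biased=biased) for s in seeds]
    return sum(distances) / len(distances)


if __name__ == "__main__":
    print("with chemotaxis:   ", mean_final_distance(True))
    print("without chemotaxis:", mean_final_distance(False))
```

Averaged over a handful of seeds, the cell that adjusts its tumbling ends up much closer to the source than the one that tumbles at a fixed rate, even though every individual tumble is random and nothing in the loop monitors where the cell is going.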

These chemical cascades that lead to the bacterium’s movement are contained within the cell membrane of the individual bacterium, which is a finely balanced chemical machine, maintaining the levels of chemicals inside it in a series of intricately interconnected chemical reactions. These chemical reactions keep the bacterium alive and reproducing – all using chemistry which is now well-understood by scientists. Life is, ultimately, just very advanced chemistry. Which, in my opinion, makes the evolution of the overwhelming, rich, all-consuming stream of consciousness we experience every waking moment all the more incredible.

In bacterial chemotaxis, the functional biological machinery is set up to respond directly to the stimulus without ever generating awareness. There is no meta-process monitoring the progress of the machinery, or its progress through the outside world – it’s a cellular Roomba, continually updating its position based on its immediate environment using automated biochemical scripts.

That’s the case for all single-celled organisms, but even multicellular colonies do not necessitate consciousness. A multicellular organism like a tree communicates between its various body parts, as some way of communicating between cells is necessary to exist in a multicellular fashion (at the very least, to maintain body shape). But does a tree’s branch have any awareness of what is happening in its root?

The cells in the branch may receive varying degrees of nutrients depending on what’s taking place in the roots and, individually, the biochemical processes operating within each cell adapt to such information, but this is just a larger, multicellular version of the machinery which operates within a single bacterium. There is no central coordinated information system perceiving and integrating information within the tree which gives rise to the experience of both branch and root. There is no nervous system.

This doesn’t stop trees from doing some impressive things like communicating between different individual organisms through the subterranean fungus-powered mycorrhizal network, using nutrient transfer and even using biochemical and electric signals (side note – if you want to hear what the electrical signals of a mushroom sound like, it’s worth a listen). But electrical communication does not require consciousness either – this, after all, is more or less how computers communicate with each other (dial-up sounds for comparison).

But what about fungi? They are, after all, the closest multicellular relatives of animals, having diverged from our lineage over 1 billion years ago. Could it be possible that there is, in fact, consciousness contained within those intricate electric firing patterns of fungi? This is an interesting question, but it runs into the following problem. There are zero fungi we can be reasonably sure experience consciousness, compared with the previously mentioned multiple animal species which exhibit the apex of conscious experience, self-awareness. As such, this is a question that we can only properly answer after we have developed an understanding of consciousness in animals and are then able to extrapolate it to other places. It is not a question which helps us develop a framework for understanding consciousness in the first place, so it’s one best left until later — just like the questions about how genetic information was stored and transmitted, which were largely speculative until Crick & Watson’s 1953 discovery of the double helix structure of DNA provided the framework with which to answer them.

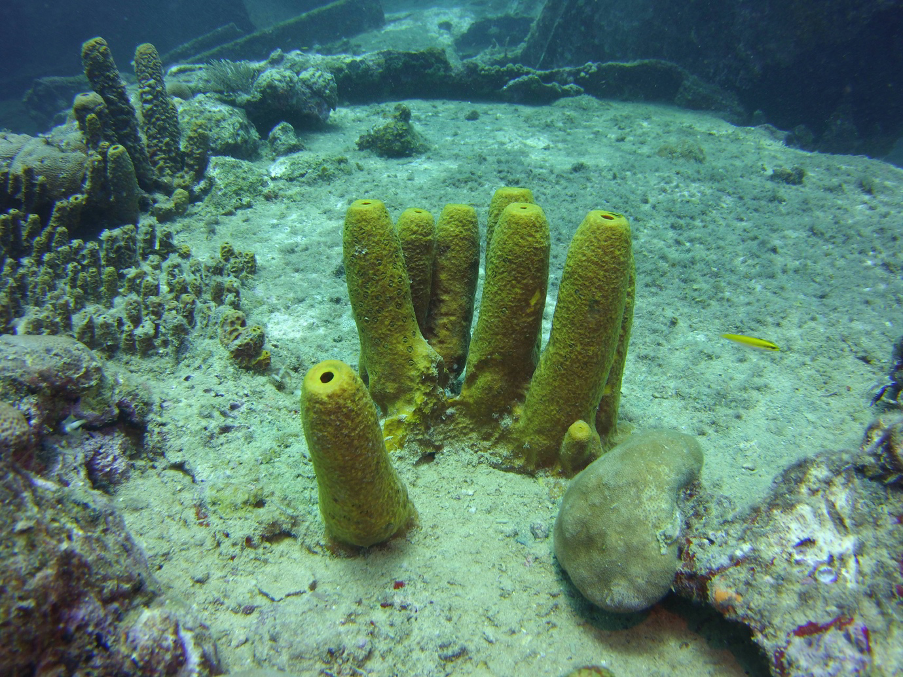

Moving along the tree of life, we come to sea sponges – the most basic multicellular animals, which split from all other animals between 600 and 800 million years ago.

Sponges lack digestive tracts, nervous systems, and muscular systems. You could say that they are an organism which is comprised of a single organ. They are, essentially, a multicellular colony of cells, many of which closely resemble single-celled choanoflagellates (the closest living relatives of animals). Because they are a colony of single cells, you can literally blend a sea sponge and the cells reaggregate into the fully formed organism.

Because of this, I can confidently say that, just as a bacterium undergoing chemotaxis does not have an internal experience, a sea sponge doesn’t feel — because there is nothing doing the feeling.

But then, evolution caused the development of something utterly profound.

The evolution of the nervous system

My assumption – that consciousness as we understand it is an emergent property of the animal nervous system – implies that consciousness must predate the Cambrian explosion, that incredible, sudden diversification of complex life which began 538.8 million years ago. The Cambrian explosion led to the emergence of most modern animal phyla (the groups of related creatures which branch off the tree of life), including arthropods, worms, molluscs and chordates (the ancestors of vertebrates, which eventually evolved into you and me).

Though we cannot be certain when the first nervous system evolved, the oldest surviving phylum which possesses a nervous system is Cnidaria (pronounced nigh-dare-ear; the c is silent), which includes jellyfish. This group branched from other animals around 600 million years ago, implying that primitive nervous systems predate the diversification of animal life in the Cambrian explosion. Jellyfish typically do not possess the centralised nervous systems found in other animals, but instead possess diffuse “nerve nets” – interconnected nerve cells lacking a distinct brain.

In my opinion, this marked the very first step on the gradient of consciousness to self-aware social animals like humans. Consciousness is an emergent property of the nervous system, like how a molecule is greater than the sum of its component atoms; a cell is greater than the sum of its component molecules; a plant is greater than the sum of its component cells; human civilisation is far greater than the sum of its individual humans.

The nervous system enables coordination between body parts in a much more rapid manner than is possible using nutrient transfer or chemical signalling – but controlling movement alone does not make a system conscious. A simple, biomechanical sensory system – like sunflowers following the sun – enables responsiveness to the environment. This in itself is highly adaptive, but there is nothing that it is like to be a sunflower. And there are two reasons why not.

When approaching something from an evolutionary perspective, it’s useful to consider its “purpose” – why did it evolve in the first place? What selective pressures acting on the system caused the evolution of a specific feature? Did it evolve because it was clearly adaptive, like the ability to detect light, or as a side effect of the development of another feature, like nipples in male humans?

The “purpose” of consciousness – the reason it was so adaptive for the first organisms in which it developed as an emergent property of primitive, coordinating nervous systems – is that it enables the organism to navigate much more successfully through space and time. To traverse the external environment not by chance, but with deliberate direction.

Perception

The first component of consciousness that is specifically enabled by the nervous system is the possibility of receiving immediate feedback on the organism’s movement through 3-dimensional space. Not just moving, but sensing, perceiving the movement, and adjusting future movement as a response. Self-direction. The evolution of sensory organs, like the basic light sensing “eyes” found in jellyfish, provided additional information about the external environment to the conscious experience embedded in the nervous system. This further improved the organism’s ability to perceive, react, and act upon the external world — and this is highly adaptive.

This creates the feedback loop of perception and action which I believe underlies the experience of consciousness in animals. This is, broadly speaking, based on the Interface Theory of Perception, first proposed by Donald Hoffman: that our conscious experience is not a reflection of objective reality, but rather a subjective interpretation of the world that is shaped by our nervous system’s attempt to make sense of the sensory information it receives. More recently, Anil Seth has done some great work on predictive processing – how conscious experiences of the world around us emerge as forms of perceptual predictions. It’s well worth checking out his recent Michael Faraday Prize Lecture.

This perception-action interface, which first evolved in the early iterations of the animal nervous system, gives animals the ability to represent the world within their conscious experience in order to navigate the environment more successfully.

Of course, rather than the multifaceted, rich experience of the external world which we experience thanks to our highly evolved nervous system, primitive jellyfish nerve nets don’t allow for very accurate perception of the external environment. Nerve nets confer a small amount of control, enough to be adaptive, but the information they receive through their rudimentary nervous systems is lacking sufficient clarity – sufficient resolution – to be able to respond with any degree of precision. As an example, jellyfish are able to respond to physical contact but cannot accurately determine the direction of the source of the stimulus.

Later cephalisation (the evolution of a “head”) and centralisation of the nervous system provided organisms with a greater clarity of perception, a higher-resolution mental ‘picture’ of the environment, which in turn enables more precise operation within that environment.

This is what creates awareness of the environment – it's a feedback loop between sensory input and output, and conscious experience lies at the centre.
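To make the idea of perceptual “resolution” concrete, here is a minimal sketch of a perception-action loop. It assumes a one-dimensional world, a hypothetical `sense` function that rounds whatever the animal perceives to a fixed resolution, and an arbitrary `gain` controlling how strongly it acts on each perception. None of these details come from real neurobiology; the sketch only illustrates that the precision of an action is limited by the precision of the perception feeding it.

```python
def sense(true_offset, resolution):
    """Hypothetical sense organ: report the offset rounded to the nearest `resolution`."""
    return round(true_offset / resolution) * resolution


def pursue(target, resolution, steps=50, gain=0.5):
    """Repeatedly perceive the offset to a target and move towards it.

    Returns the remaining distance to the target after `steps` iterations.
    """
    position = 0.0
    for _ in range(steps):
        perceived = sense(target - position, resolution)  # perception
        position += gain * perceived                      # action based on perception
    return abs(target - position)


if __name__ == "__main__":
    # Same target, two different perceptual resolutions (values chosen arbitrarily).
    print("coarse senses:", pursue(10.3, resolution=4.0))
    print("fine senses:  ", pursue(10.3, resolution=0.1))
```

With coarse senses the agent stops moving as soon as the target falls below what it can perceive; with finer senses the same loop homes in more closely.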

But it is not the whole picture. Pure experience of the world is not adaptive by itself. It is only when it is combined with an understanding of what these sensory inputs mean that the system becomes adaptive. This requires consciousness to have the ability to categorise the external world. Rather than merely being able to detect light – as the sunflower does – a conscious animal must be able to infer the meaning behind that light.

And it does this through another fundamental feature of the nervous system – memory.

Memory

This is the second core component of consciousness that the nervous system enables – the ability not just to react to the environment, and not even just to experience it, but to learn from it.

Memory is the ability to retain information about prior experiences and enables the organism to learn from them when navigating future experiences. This is as transformational to survival as the leap from single-celled to multicellular organisms. Not just the ability to move, or to direct one’s own movement, but to direct the movement in a way which is advantageous. Not just to respond to sunlight, but to remember what the sunlight means for interacting with your environment.

Memory enables animals to learn about the environment, and it is the nervous system which allows the encoding of memories by the formation and strengthening of the connections between individual nerve cells (synapses). There is a famous maxim in neuroscience that goes “neurons that fire together, wire together”. This means that when neurons – nerve cells – are activated at the same time, perhaps in response to an environmental stimulus, they are more likely to fire together in future. This is called Hebbian learning, and it’s one of the ways that the nervous system stores memories and learns about the environment; this principle is also used when developing artificial neural networks (AI).
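The “fire together, wire together” rule can be written down as a simple update, sketched here in Python. In this simplified form, the connection between two neurons is strengthened in proportion to the product of their activity. The three-neuron network, the learning rate, and the function name `hebbian_update` are illustrative assumptions, not a model of any real nervous system.

```python
def hebbian_update(weights, activity, learning_rate=0.1):
    """Return new connection weights after one simplified Hebbian learning step.

    weights[i][j] is the strength of the connection between neurons i and j;
    activity[i] is how strongly neuron i is firing (0.0 means silent).
    """
    n = len(activity)
    new_weights = []
    for i in range(n):
        row = []
        for j in range(n):
            if i == j:
                # No self-connections in this toy network.
                row.append(weights[i][j])
            else:
                # Strengthen the connection when both neurons are active together.
                row.append(weights[i][j] + learning_rate * activity[i] * activity[j])
        new_weights.append(row)
    return new_weights


# Start with three unconnected neurons.
weights = [[0.0, 0.0, 0.0] for _ in range(3)]

# Neurons 0 and 1 repeatedly fire together; neuron 2 stays silent.
for _ in range(10):
    weights = hebbian_update(weights, [1.0, 1.0, 0.0])

if __name__ == "__main__":
    for row in weights:
        print(row)
```

After repeated co-activation, the connection between neurons 0 and 1 has grown, while every connection involving the silent neuron 2 remains at zero. The network now carries a trace of which neurons tended to fire together.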

This process of memory encoding and learning within the nervous system gives rise to the experience of memory and learning within the animal’s stream of consciousness – this then influences future behaviour in response to changes in the environment. This is such a monumental step forward in terms of survivability that it paved the way for the evolution of the entire animal kingdom.

Why are animals so successful? Because they can learn.

And this is a property conferred upon them by even the most basic nervous system, simply due to the properties of neurons. A study, published last year in Current Biology, found that box jellyfish demonstrate associative learning, remembering their environment so that they can adjust their behaviour to better navigate it.

With 24 primitive eyes, a sense of touch, a gravity-sensing organ, and merely 1000 neurons, the box jellyfish in a laboratory tank were able to learn how to avoid collisions with visual obstacles within just a few minutes.

There is something that it is like to be the jellyfish, learning about its perceived environment by bumping into the walls of the tank.

Consciousness as a behavioural drive

Everything else that is added into the gradient of consciousness beyond this core of perception, memory, and coordination just builds additional layers and expands upon the boundaries of what the consciousness can possibly experience.

Sensing the environment evolved to include smell, hearing, more sensitive degrees of touch and sight – all enabling a higher-resolution and more precise representation of the outside world to be perceived.

The ability to sense internal sensations – interoception – enabled responding to bodily signals by creating the conscious experience of hunger to drive behaviour towards seeking food. The experiences of pain and pleasure developed to bias behaviour to avoid harmful activities and seek out those like eating and having sex which were beneficial to the reproductive success of the organism.

It’s worth drawing a comparison here with AI – consciousness is the equivalent of the “black box” in artificial neural networks. When developing an artificial neural network (like ChatGPT), you have an input (a text query) and an output (ChatGPT’s response), and the model is refined and trained based on the relationship between the input and its output. The bit that happens in between is difficult to understand and, indeed, understanding it is unnecessary – so long as the output is useful. In exactly the same way, evolution doesn’t care about the bit in the middle of biological neural networks – it only cares about the input (the selective pressures acting upon the organism from the environment) and the output (the behaviour of the organism in response to the environment).

Organisms which exhibit adaptive behaviour in response to their environment pass on their genes to the next generation, and if these genes are capable of encoding the behavioural response in the nervous system of the offspring, then the adaptive behaviour proliferates down the gene line. The “black box” in biological neural networks is consciousness – evolution doesn’t care how consciousness affects behaviour; evolution only cares that consciousness does affect behaviour. And the way consciousness creates most behaviour is through emotions – filters which alter an animal’s conscious experience to bias behaviour towards a certain outcome. Under this definition, I would include hunger, thirst, and tiredness as emotions as well – I believe that you cannot have a definition of emotion which does not include consciousness (though the Wikipedia article on emotions barely mentions the word at all).

More complex emotions evolved to bias the organism towards other adaptive behaviours. The consciously experienced emotion of fear biases behaviour to flee and hide from the source of fear (flight). The consciously experienced emotion of anger biases behaviour towards aggression – to fight. The consciously experienced emotion of disgust biases behaviour to avoid the source of disgust. And animals which experienced these emotions in response to appropriate stimuli (fear of predators, anger at sexual rivals) were more likely to survive to pass on the genes which encoded those emotions within the nervous system – and therefore the associated behaviours – to the next generation.

The most compelling modern example of passing on behaviour from one generation to the next is dog breeding – since their first domestication 30,000 years ago, wolves have been selected to exhibit more juvenile, friendly behaviour, resulting in our beloved canine companions (I write this with a Dachshund asleep on my lap). Dog breeds exist because certain behaviours (tracking, chasing, attention-seeking) were selected for in the breeding process.

Emotions even act as a filter on memory, with memories accompanied by more intense emotions being encoded more strongly, to ensure that experiences which are notable for the creature’s survival or reproductive success are more likely to guide future behaviour. This is why, even for humans, memories of warm, sunny holidays or unpleasant, distressing experiences linger much longer than mundane day-to-day drudgery.

Consciousness enables the development of much more complex behaviour than is found in sedentary organisms like plants or sponges. Once those ancient oceans filled with conscious creatures, for the first time in the history of life the greatest selective pressure acting on any individual organism was how it interacted with other conscious beings.

Consciousness as a selective pressure

Nervous systems – and the consciousnesses contained within them – continued to develop, eventually reaching the Cambrian explosion 538.8 million years ago. This is another one of those unanswered mysteries – scientists still debate what caused this explosion in the diversity of life. The leading hypothesis is that a small increase in oxygen levels enabled advanced predation, and advanced predation is perhaps one of the strongest selective pressures imaginable – a continual threat of extinction for any species that fails to adapt. Indeed, many of the animals which flourished at this time can be found in the fossil record with resistant, armoured bodies, strongly implying a higher degree of predation in the environment.

But this overlooks something much more fundamental: advanced predation is only possible due to the evolution of consciousness. The basic nervous systems of jellyfish needed to undergo a great degree of increasing complexity and sensitivity before providing the very earliest motile animal predators the capacity to navigate their environment with such precision that they could consume other conscious animals as a reliable source of food.

The Cambrian explosion occurred as a direct consequence of the evolution – and increasing complexity – of consciousness.

And the nervous system – and the conscious experience it creates – has been evolving ever since, with new forms of locomotion, sensory processing, more complex emotions and behavioural drives, reasoning, planning, communication, advanced social behaviour – and even self-awareness.

Artificial Consciousness

At no point in this thought experiment have I assumed that the evolution of the animal nervous system is the only possible way that consciousness can emerge – as mentioned when considering the electrical impulses of fungi, it may exist elsewhere. It may even be possible to construct consciousness artificially, using digital neural networks rather than biological ones, or through other network effects – but the only evidence of consciousness we possess exists as a consequence of the unique properties of the animal nervous system.

Does this then mean that artificial intelligence could already be conscious, as ex-Google engineer Blake Lemoine believes? Is ChatGPT conscious? It’s worth considering – while it may well have a memory of sorts, it currently lacks the crucial perception-action feedback loop which I believe to be a core component of consciousness in animals; AI does not – yet – have the ability to self-direct. For fairly obvious reasons, we are reluctant to build AI models with this capability – warnings like I, Robot and Terminator have fortunately found their way into our collective cultural awareness.

What about robots – like a Boston Dynamics robot? This has a memory AND the ability to adjust its actions based on proprioceptive and visual feedback – does this make it conscious? Is there something that it is like to be a Boston Dynamics robot?

And what about self-driving cars, which also self-direct AND remember? Does their computer-based architecture allow for the existence of a conscious experience based in the patterns of firing of code? Have we created primitive consciousnesses on a par with pre-Cambrian explosion animals?

As you can see, this blurry boundary is beginning to become relevant outside the realm of biology, and outside the realm of animal consciousness. Those pesky ethical questions highlighted in the New York Declaration might not apply only to the meat industry, battery farmed chickens, and pets, but perhaps to your AI personal assistant too.

But until we understand a theory of consciousness in basic animal nervous systems, these questions will remain opaque and difficult to answer.

In conclusion

Does any of this actually help us resolve the mystery of consciousness? To come up with a mathematical theory? The biggest challenge is that consciousness is an emergent property of the nervous system – and studying emergent systems by studying the level beneath is, to put it mildly, quite difficult.

As an example, in quantum physics it is possible to very precisely model a single hydrogen atom, the interaction between a solitary proton and a single electron, using a brilliant piece of mathematics called the Schrödinger equation. However, if you try to use this equation to model complex molecules with many more protons, neutrons, and electrons – like sugars and proteins – the calculation grows exponentially more complex with each added particle. This makes it computationally impossible to solve these equations exactly for such large systems – with current technology, mathematically describing a molecule like DNA using the Schrödinger equation would take orders of magnitude longer than the age of the universe. Instead, chemists rely on higher-level theories and models which, while based on quantum mechanics, are more practical for studying complex systems.
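To get a feel for that exponential growth, here is a rough sketch in Python. It assumes the crudest possible approach: tabulating the wavefunction on a uniform grid, where every particle adds three spatial coordinates. The function name and the choice of 10 grid points per axis are illustrative assumptions, not how chemists actually work.

```python
def grid_points(n_particles, points_per_axis=10):
    """Values needed to tabulate a wavefunction of n_particles on a 3D grid.

    Each particle contributes three coordinates, so the wavefunction is a
    function of 3 * n_particles variables. The function name and the default
    of 10 points per axis are illustrative assumptions.
    """
    return points_per_axis ** (3 * n_particles)


if __name__ == "__main__":
    # One particle needs a thousand values; each extra particle multiplies
    # the storage by another thousand.
    for n in (1, 2, 5, 10):
        print(f"{n:>2} particle(s): {grid_points(n):.0e} grid values")
```

Even at this very coarse resolution, every added particle multiplies the amount of work by a thousand, which is why exact solutions are out of reach long before we get to anything the size of a protein.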

This is true across the field of emergence in mathematics, itself (ironically) an emerging field. Emergence studies how complex systems and patterns arise from simple interactions, using mathematical models to understand behaviours in complex systems not evident from the system’s individual components. Just like how the properties of DNA emerge from the properties of its individual molecular components – or the properties of consciousness emerge from the properties of the animal nervous system.

The emergent properties of consciousness are contained within the patterns of firing of neurons rather than the neurons themselves. Nevertheless, it may be possible to determine the properties of consciousness from the properties of the nervous system through a sophisticated mathematical understanding of emergence.

This may be where the crossover with artificial neural networks can come in useful – given the relative simplicity of cnidarian nerve nets, it may be possible to model these basic nervous systems. Of course, artificially modelling even a single neuron is an incredibly complex task – it took biological evolution more than 3 billion years before the first nerve cells evolved, which is a rather long time to develop complexity.
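As a purely illustrative toy, and not a biologically faithful model, a nerve net can be sketched as a ring of very simple "leaky integrate-and-fire" units, each connected to its two neighbours. Everything here is a hypothetical assumption chosen for readability: the ring layout, the threshold, the leak, the connection weight and the refractory period.

```python
def simulate_nerve_net(n_neurons=8, n_steps=6, threshold=1.0,
                       leak=0.5, weight=1.0, refractory=2):
    """Toy ring of leaky integrate-and-fire units (all values hypothetical).

    Returns, for each time step, the list of neurons that fired.
    """
    potential = [0.0] * n_neurons
    rest_until = [0] * n_neurons      # step before which a unit cannot fire
    incoming = [0.0] * n_neurons
    incoming[0] = weight              # external stimulus to neuron 0 at step 0
    history = []

    for step in range(n_steps):
        fired = []
        for i in range(n_neurons):
            if step < rest_until[i]:
                potential[i] = 0.0    # refractory: ignore any input
                continue
            # Old potential decays by the leak factor, new input is added.
            potential[i] = leak * potential[i] + incoming[i]
            if potential[i] >= threshold:
                fired.append(i)
                potential[i] = 0.0
                rest_until[i] = step + 1 + refractory

        # Each unit that fired excites its two neighbours on the next step.
        incoming = [0.0] * n_neurons
        for i in fired:
            incoming[(i - 1) % n_neurons] += weight
            incoming[(i + 1) % n_neurons] += weight
        history.append(fired)

    return history


if __name__ == "__main__":
    for step, fired in enumerate(simulate_nerve_net()):
        print(step, fired)
```

With the default values, a single poke at neuron 0 sends two waves of activity around the ring in opposite directions, which meet at neuron 4 and die out. Real neurons are vastly more complicated than these units, but even a sketch this crude produces a pattern of firing that belongs to the network rather than to any one unit.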

The key difference, of course, between chemistry and consciousness science is that it is possible to directly observe molecules using technology like X-ray crystallography and electron microscopes – whereas the observation of any subjective experience other than our own is currently impossible.

But this doesn’t mean we can’t draw conclusions about the conscious experience of other creatures: by practicing radical empathy; by understanding how the development of our own individual brains led to our own conscious experience; by considering whether it was adaptive for any particular animal to experience a particular aspect of consciousness; and by keeping in mind the evolutionary timeline of the nervous system.

This, I hope, is where making the assumption that consciousness is an emergent property of the animal nervous system might come in useful. Because, if true, then modelling primate consciousness before we’ve modelled jellyfish consciousness is like trying to understand the complex counterpoints and variations of Johann Sebastian Bach’s unfinished “The Art of Fugue” before learning the concepts of rhythm and melody in “Twinkle, Twinkle, Little Star”. Like trying to land a human on Mars before the invention of powered flight.

So, there’s my call to action: if you really want to understand consciousness, stop studying the most complex examples of it – humans – and start studying jellyfish.

If you liked this article, please consider subscribing to Everything Evolves:

And I encourage you to leave a comment to share your thoughts:

Consciousness comes from the Cambrian — what an alliterative climax! Thanks for exploring this as a narrative (of evolution); it makes this much easier to reason about.

Have you read Ogas’ and Gaddam’s book, “The Journey of the Mind”? I need to re-read it, but I recall them drawing the consciousness line at fish (because of something cool and self-referential about their neural representations). Also, what do you think of Hoel’s take that consciousness is graded?